This was a project I did for a Reinforcement Learning course during my Msc study. In this project, I worked on training an RL agent to learn to play Blackjack, a popular game worldwide.

Blackjack is a simple game that I understand well. In addition, its action space is small (only a few actions such as Hit, Stand, Double-down, Split) and its state space is also small, making it a good candidate for a learning project about Q-Learning (tabular and Deep-Q).

Below are a few key notes:

In this project, I was able to extend the Blackjack toy environment by Gymanisum to support also Double-down and Split actions.

For Blackjack, the tabular Q-learning produced better policies across the board. I suspect that the Deep-Q version is probably an overkill for this game, and to produce good results further hyper-params adjustments would be needed.

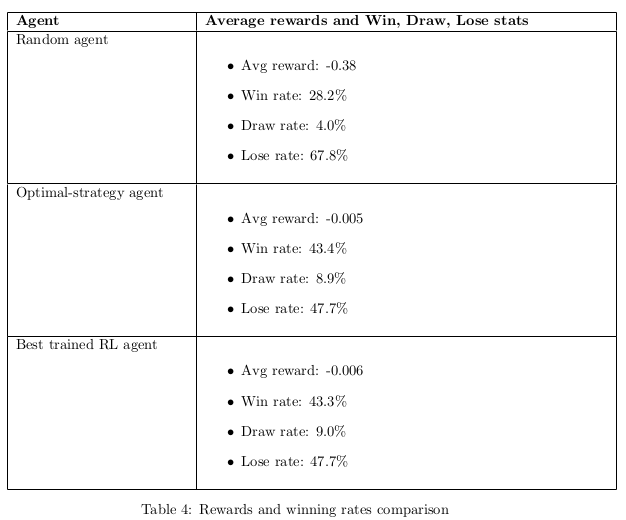

The best trained policies results are shown in the picture below (see Section 4.4 in the project report).

- One of the most interesting part when doing this training is to see how the agent’s policy evolves overtime. And that evolution is visualized and shown in Appendix A of the report.

You can read my full report below: