This was another project I did for an AI course during my MSc studies. In this project, I decided to work on finetuning pretrained language models for multilingual Natural Language Inference (NLI).

The context and data for this project were based on this Kaggle competition.

You can read my project report in full here. Below are a few interesting takeaways:

This project was the first time I worked with the Flan-T5 and mDeberta-V3 models. I’d recently heard about them and thought this would be a good chance to try them out. mDeberta-V3 performed very well, and consistently so across all 15 evaluated languages. Surprisingly, Flan-T5, which was multilingual by pretraining, performed OK but not as well as mDeberta. I still don’t know exactly why.

Another unresolved issue was that finetuning somehow didn’t work for XLM-Roberta, even though I had obtained decent finetuning results with it in previous projects.

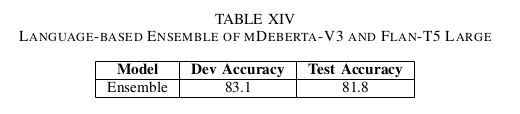

Apart from the above two points, everything went as expected. The best results were obtained using an ensemble of finetuned mDeberta-V3 and finetuned Flan-T5 Large. The accompanying picture shows this result.
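For readers unfamiliar with ensembling, one common way to combine two classifiers like these is soft voting: average each model's per-class probabilities and pick the highest-scoring class. The sketch below is only an illustration of that idea and not necessarily the method used in this project. The label order, the probability values, and the `soft_vote` helper are all made up for the example, and it assumes each model's output has already been converted to class probabilities (for a text-to-text model like Flan-T5 that takes an extra step not shown here).

```python
from typing import List, Optional, Sequence

# Assumed label order for illustration; the real mapping depends on the dataset.
LABELS = ("entailment", "neutral", "contradiction")


def soft_vote(
    model_probs: Sequence[Sequence[Sequence[float]]],
    weights: Optional[Sequence[float]] = None,
) -> List[int]:
    """Average per-class probabilities across models and return argmax labels.

    model_probs[m][i][c] is model m's probability for class c on example i.
    weights optionally gives each model a relative say in the average.
    """
    n_models = len(model_probs)
    if n_models == 0:
        raise ValueError("need at least one model")
    if weights is None:
        weights = [1.0] * n_models
    if len(weights) != n_models:
        raise ValueError("need exactly one weight per model")

    total = sum(weights)
    predictions = []
    for i in range(len(model_probs[0])):
        n_classes = len(model_probs[0][i])
        # Weighted mean of the models' probabilities for each class.
        avg = [
            sum(w * probs[i][c] for w, probs in zip(weights, model_probs)) / total
            for c in range(n_classes)
        ]
        predictions.append(max(range(n_classes), key=avg.__getitem__))
    return predictions


# Hypothetical probabilities for two premise/hypothesis pairs.
mdeberta_probs = [[0.70, 0.20, 0.10], [0.10, 0.30, 0.60]]
flan_t5_probs = [[0.40, 0.50, 0.10], [0.20, 0.50, 0.30]]

preds = soft_vote([mdeberta_probs, flan_t5_probs])
print([LABELS[p] for p in preds])  # ['entailment', 'contradiction']
```

Passing `weights` lets a stronger model count for more in the average, which can help when one model is clearly better on a validation set.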

If you’re interested in this topic, the full report is embedded below: